Computers by the Decade

2021-03-31

I've been doing a lot of research into the history of computers[1] for my forthcoming Middle Grade novel about robots (as of March 2021, I'm linking to a largely blank page here, which I assure you will one day be filled with the multitude of books I'm going to write - end Daily Affirmation now).

I'd like to share my current, wait for it, mental model for how I'm thinking about the evolution of computing over the decades. I'll also highlight some current companies that I find interesting because they seem to be fellow computer historians.

First, a caveat canem: 🐶 Since this website is my little corner of the dub-dub-dub, I reserve the right to update this framework as I learn more. And I will be learning more, because I can't stop reading about this stuff. I love it. So, there, take that, fellow computer historians! Even better, please let me know what I've messed up or got wrong so I can learn. Woof!

So, here's how I see the evolution of computers over the decades (including some predictions):

| Decade | Computers |
|---|---|
| 1940s | Analog |
| 1950s | Digital |
| 1960s | Warehouse |
| 1970s | Mini |
| 1980s | 8-Bit PC |
| 1990s | Pentium PC |
| 2000s | Laptop |
| 2010s | Smart Phones |
| 2020s | Wearables |
| 2030s | Embeddables |

Lemme recap these a bit (in a freewheeling manner). We see the first "stored-program" digital computers coming to life in the late 40s. These are your o.g. "Von Neumann" architecture machines, the real-world implementations of the universal Turing machine vision from 1936. Goodbye, Vannevar Bush's Differential Analyzer -- we've now got computers that we can "re-wire" with "code" instead of electromechanical widgets and whatnot that had to be manually reconfigured for different problems (usually military applications around this time, like calculating missile trajectories).

Then comes the era of the ominous Warehouse computer, dominated by Big Blue (IBM) and the angry BUNCH (Burroughs, UNIVAC, NCR, Control Data Corporation, and Honeywell) -- aka the FAANG of days of computer future past. Computers were huge, literally, during the 60s and 70s. Actual insects would get lodged inside them and wreak havoc -- "bugs"! This was also around the time that people thought there might only need to be a handful of computers in the whole wide world -- that computers would be a public utility like telephone service.

Along related lines, this is where folks started experimenting with "computer networking." Let's layer that strand into our decades framework:

| Decade | Computers | Networking |
|---|---|---|
| 1940s | Analog | N/A |
| 1950s | Digital | N/A |
| 1960s | Warehouse | Timeshare |
| 1970s | Mini | ARPANET |
| 1980s | 8-Bit PC | Internet |
| 1990s | Pentium PC | WWW |
| 2000s | Laptop | Web 2.0 |
| 2010s | Smart Phones | Apps |
| 2020s | Wearables | TBD |
| 2030s | Embeddables | TBD |

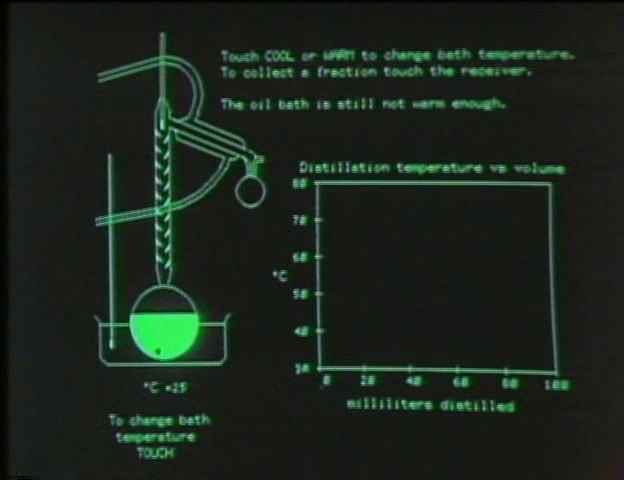

Timesharing. You've probably heard of it before. Essentially, the few people lucky enough to have access to these machines would sit at "dumb" Teletype terminals and their commands would be executed in some mainframe somewhere else (usually at their university). You didn't have to own a computer to use one, you just needed to pay for access (sounds a lot like "cloud computing" nowadays, right? Time is a flat circle). It wasn't all university work, either. Games were being created and shared, and projects like PLATO at the University of Illinois were doing interactive things that seem impossible for their era:

Take a look at the rows for the 80s and 90s - you're basically looking at Halt and Catch Fire season by season.[2] Personal computers. The World Wide Web. AOL Instant Messenger. This is where things start hitting hard on the nostalgia receptors for me. I'll never forget the early '90s Christmas where we got our SoundBlaster 16 sound card. Carmen Sandiego never sounded so good.

Let's fast-forward to the new millennium. Frankly, I'm increasingly less interested in the more recent eras of apps and smart phones. Even my predictions are boring to me. I don't have an Apple Watch. I know "AR" is coming (see recent WWDC teaser). I know we're going to be able to read brainwaves of pigs soon (or already are). I should probably keep more of an open mind about these upcoming technologies, so I'll try. But the history major in me just wants to go back and play with my Apple IIe.

When I think about it, there are tons of additional "strands" that could and should be tracked in this framework. For example, the evolution of memory type and memory capacity over time is super fascinating, and is probably the number one driver of the changes in the "computers" column.

Or another idea, even closer to my heart, would be adding the "definitive" book that should be read for each era. Actually, let me try that one.

| Decade | Computers | Networking | Book |
|---|---|---|---|
| 1940s | Analog | N/A | |
| 1950s | Digital | N/A | Turing's Cathedral |
| 1960s | Warehouse | Timeshare | The Friendly Orange Glow |
| 1970s | Mini | ARPANET | The Soul of a New Machine |
| 1980s | 8-Bit PC | Internet | Return to the Little Kingdom |
| 1990s | Pentium PC | WWW | Hard Drive |
| 2000s | Laptop | Web 2.0 | |
| 2010s | Smart Phones | Apps | |
| 2020s | Wearables | TBD | |
| 2030s | Embeddables | TBD | |

I need some suggestions for the more recent eras!

Three Companies That Seem to Also Appreciate Computer History

Okay, onto the best part...

Replit

Replit is a company that makes it easy to spin up an IDE in any language, right in your browser. Goodbye painful local dev environment setup. Students LOVE it. Teachers LOVE it. School administrators apparently DON'T LOVE it (according to what I've seen on Twitter).

So, what does Replit know about computer history?

They are the modern timesharing system - global, instant access to compute, with collaboration as a first-class objective. It's a place to learn, play games, write your own games, build apps, ship stuff. They're even talking about building their own hardware "dumb" terminal that connects instantly to Replit.

We're prototyping a computer that boots to Replit and is hardwired with a VPN to make it impossible to block.

— Amjad Masad ⠕ (@amasad) March 20, 2021

If it works, it will be free for students. Devs will pay full price with each computer sold will sponsor a student. https://t.co/8cQdhJqvFv pic.twitter.com/Qu1eTYYa1x

Timesharing is back. And this time you don't have to share, unless you want to!

Tailscale

Tailscale is a company that makes it dead simple to set up a VPN for all your devices: phones, Raspberry Pis, laptops, desktops, you name it.

So, what does Tailscale know about computer history?

They are making the '90s LAN party possible again. It was a beautiful thing when you could "easily" make your computers talk to each other, but then the Internet got scary and hard, and Tailscale makes it safe and easy again.

I recently used Tailscale to set up a little Raspberry Pi-powered robot car. I can SSH into my little robot and drive it around, and I'm feeling like a kid again.

Oxide Computer Company

Oxide Computer Company is a new computer company. Like hardware-hardware computer company! They're building servers for folks who don't want to (or can't) just use AWS, GCP, Azure, Digital Ocean, blah blah... the cloud.

So, what does Oxide know about computer history?

More than me! Just listen to their epic podcast On the Metal to hear for yourself. They even went through a fun design project of redesigning their logo to look like those of definitive computer companies of days past.

But most importantly, I think that Oxide harkens back to the '90s era of owning your compute infra end-to-end. We do not have to accept that everything will be in the cloud. Maybe your closet is a better choice. Tradeoffs, amiright? There are going to be even more cases in the future where local compute is needed (e.g. how about the Moon or Mars?!)

Computer historian

In conclusion:

By trade, I'm a software engineer. By spirit, I'm a computer historian. By George, I'm trying to combine the two (just like these three companies).

Footnotes

1. I'm kinda showing off something neat with this link -- linkable searching using query-params for my Library book list. I'm proud of this lil' quality of life feature for my site. If you're one of those people who like to strip query params from links, by all means do so, but if you want to share a list of the books I've read about Ancient Rome (why would you want to do this if you're not me?), keep 'em in the link.

2. Watch this show. If you love computers, watch this show. If you love great characters making terrible decisions, watch this show. And then watch it again using this college-course level syllabus by Ashley Blewer.